-

The question of how much compute it takes to replace humans in the workforce is unnecessarily reductive. Creating efficiencies does not guarantee that humans will prefer a fully automated solution. Moreover, even a fully automated solution can still be outcompeted by an automated solution wielded by a well-networked human. While AI will likely be able to

-

At this year’s OpenAI Dev Day, I think we saw the next chapter of software unfold. The big headline wasn’t just about new models—it was about an entirely new way of building and interacting with apps. OpenAI’s introduction of AppKit hints at a world where chat itself becomes the operating system. During my time at OpenAI there

-

Seventy-two hours ago, OpenAI launched Sora, an invite-only app that has already climbed to the top of Apple’s App Store. In just three days, it’s changed how I—and a lot of others—spend time online. Speaking personally, I’ve logged more hours inside Sora this week than I’ve spent on TikTok across my entire life. More than Instagram

-

For the latest episode of the OpenAI Podcast, I sat down with OpenAI president and co-founder Greg Brockman and Codex engineering lead Thibault Sottiaux to talk about the release of OpenAI’s new GPT-5-Codex model.

-

With GPT-4 now stepping back from its starring role in ChatGPT, I want to share a few of my favorite memories from its launch. I originally joined OpenAI as an engineer on the Applied team, but later moved into a hybrid role as OpenAI’s “science communicator.” That shift let me dive deep into technical work

-

In this video, we delve into the concept of AI hallucinations by comparing them to human cognitive errors. We explore how both humans and AI can make false statements due to incorrect information or reasoning errors. The video explains different scenarios where AI, like humans, can generate incorrect conclusions based on faulty data or assumptions.

-

tldr: Yes. A recent paper, GSM-Symbolic: Understanding the Limitations of Mathematical Reasoning in Large Language Models, claims that its new benchmark demonstrates that state-of-the-art reasoning models have critical limitations when it comes to reasoning. Putting aside the fact that they grouped together tiny 2.7B-parameter models (that could fit

-

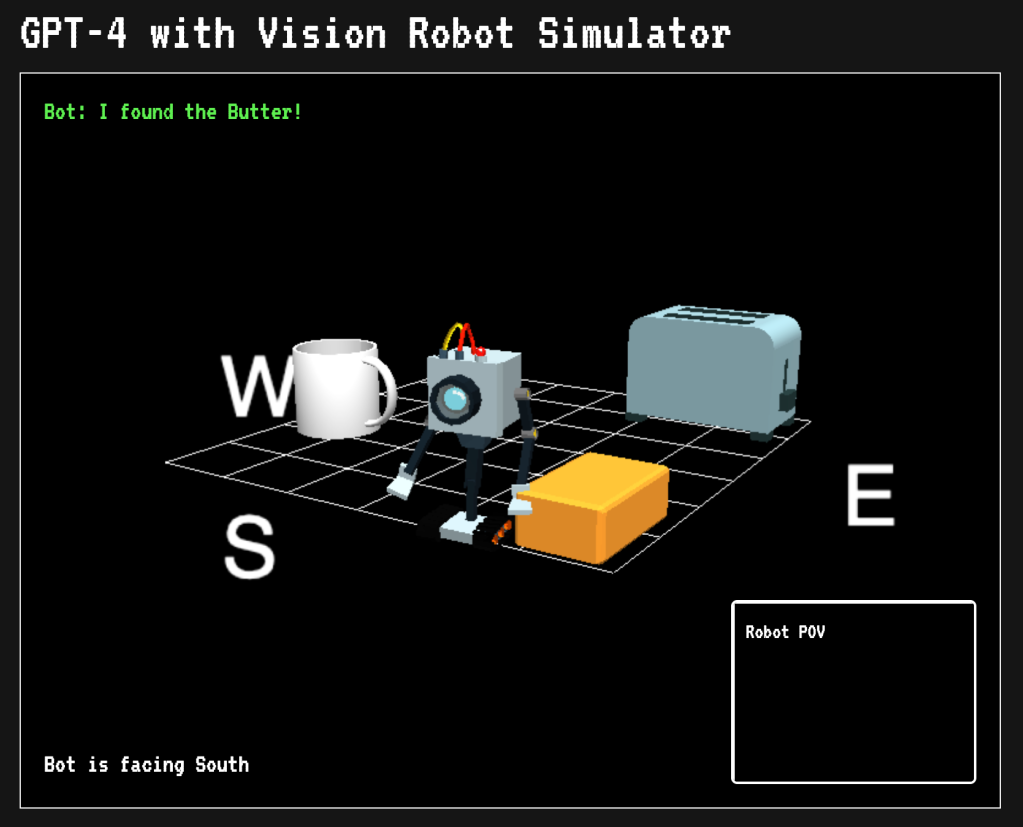

TLDR: There are multiple ways you can use GPT-4 with Vision to power robotics and other systems. I’ve included several sample apps you can download and experiment with, including a robot simulator. Multimodal AI models like GPT-4 with Vision have enabled entirely new kinds of applications that go far beyond text comprehension. A

-

TLDR: How to boost GPT-4 with Vision’s capabilities with a simple prompt addition. A recent paper, How Far Are We from Intelligent Visual Deductive Reasoning?, points out the limitations of visual reasoning in image models like GPT-4V. Like other related papers, I think the investigators are directionally correct (these models don’t have human-level reasoning and

-

A recent paper, The Reversal Curse, points out an apparent failure in large language models like GPT-4. From the abstract: We expose a surprising failure of generalization in auto-regressive large language models (LLMs). If a model is trained on a sentence of the form “A is B”, it will not automatically generalize to the reverse direction
